The AI Data Scraping Is Getting Out of Control...

Companies like ByteDance, Meta and Anthropic are so desperate for data to train their latest models that they're basically DDoSing the entire open internet.

The recent release of China’s “DeepSeek” AI has put American AI companies on notice. Despite America’s efforts to stymie China’s ability to develop quality AI models, including export restrictions on the fastest Nvidia GPUs, which are commonly used to train AI models, Chinese developers were still able to produce a model that rivals the best America has to offer at the moment. Various accusations have been leveled against China about whether they intentionally dodged the export restrictions or stole training data from American AI companies, but the end result is the same. DeepSeek’s success in the face of adversity has put fear in the hearts of American AI companies, who are now vigorously scraping the internet for anything and everything they can get their hands on, legally or otherwise.

If you haven’t seen my other post on hosting a Kiwix mirror, you may not know that I host a personal/family server with various services on it including Nextcloud, Minecraft and, in light of recent efforts by the current administration to censor free speech and scientific data that contains wrong-think, a Kiwix mirror. That means, however, that I have some publicly accessible websites that require no authentication running on my own real hardware here at home. I’m used to seeing traffic from search indexers such as Googlebot. That is a perfectly normal part of operating any website and, with regard to the Kiwix site, it’s welcome. I want that site to be discoverable by people who need it. If a particular site or article gets censored or removed from the internet and I have a copy, I have no problem with a search engine directing somebody to my copy. That’s literally the whole reason I have it running in the first place. However, there’s a big difference between the reasonable amount of traffic it takes to index what a website has so you can make links available in search results, and scraping or ingesting the entirety of a site so you can use it to train your AI model.

For example, according to my webserver’s log file for 22 March 2025, the Googlebot scraper/indexer made 188 queries to my server. In that same period of time, Claude AI made 883,360. That’s nearly 4,700 times as many requests from Claude as from Google.
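If you want to run the same kind of tally on your own server, the following is a minimal Python sketch rather than the exact method used here. It assumes the log is in the common "combined" format, where the user agent is the last quoted field on each line. The marker list, the sample lines and the function name `count_bot_requests` are made up for illustration.

```python
import re
from collections import Counter

# Hypothetical substrings to look for in the User-Agent field.
# Adjust these to whatever actually shows up in your own logs.
BOT_MARKERS = ("Googlebot", "ClaudeBot", "Bytespider")

# Assumption: "combined" log format, where the User-Agent is the
# last double-quoted field on the line.
LAST_QUOTED_FIELD = re.compile(r'"([^"]*)"\s*$')


def count_bot_requests(lines):
    """Count requests per bot marker, matching case-insensitively."""
    counts = Counter()
    for line in lines:
        match = LAST_QUOTED_FIELD.search(line)
        if not match:
            continue  # skip lines that do not look like combined-format entries
        agent = match.group(1).lower()
        for marker in BOT_MARKERS:
            if marker.lower() in agent:
                counts[marker] += 1
                break
    return counts


# Made-up sample lines using documentation-only IP addresses.
SAMPLE_LINES = [
    '203.0.113.5 - - [22/Mar/2025:00:00:01 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '198.51.100.7 - - [22/Mar/2025:00:00:02 +0000] "GET /b HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '198.51.100.8 - - [22/Mar/2025:00:00:03 +0000] "GET /c HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '192.0.2.9 - - [22/Mar/2025:00:00:04 +0000] "GET /d HTTP/1.1" 200 512 "-" "Mozilla/5.0 (X11; Linux x86_64) Firefox/136.0"',
]

if __name__ == "__main__":
    print(count_bot_requests(SAMPLE_LINES))
    # Ratio of the two daily totals quoted in the post.
    print(round(883_360 / 188))  # 4699
```

On a real log you would pass an open file object instead of `SAMPLE_LINES`, since the function only needs something it can iterate over line by line.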

iFixit recently complained that they too were having problems with Anthropic’s (Claude) AI scraper hammering their servers, stating that they were seeing in excess of a million requests per day from Anthropic alone, even more than I was seeing. I had noticed mentions of Claude in my logs for a week or two, but hadn’t paid it much mind, despite my stance on AI, because my logic was that if it helps somebody find information they’re looking for, then it’s a net positive. However, when the log file for my Kiwix software ballooned to 6.8GB in a matter of a day or two, I started doing some digging. That’s when I came across the article from 404 Media about iFixit’s issues with Anthropic and discovered just how much traffic they were generating on my personal server. That’s nearly 7GB of plain text generated by the sheer volume of traffic I was getting from AI scrapers run by folks like Anthropic, ByteDance and Meta.

So I decided something had to be done. This wasn’t just indexing what I had to make it available in a search engine, this was blatant abuse of network resources. This volume of traffic can inflate log files, making it harder for me to diagnose actual problems if they occur and adversely affect the performance of a server that is intended primarily for myself, my immediate family and real life humans who want to browse the Kiwix mirror, not bots that will make millions of requests per day for data they’re just going to butcher and regurgitate on their own website (behind a paywall) because they got scared of some resourceful Chinese people.

My first step was to create a filter for a piece of software I use called “Fail2Ban”. Fail2Ban basically runs in the background and watches log files for specific types of information you specify, such as messages like “Access denied”, and then performs actions you specify, usually blocking an IP address in your firewall. This is commonly used to easily defeat basic cyber attacks, such as brute force attempts. Try and fail to log into a website too many times and the site will just stop responding altogether because you’ll get blocked at the firewall level for a set period of time. I did some searching and found some common “user agent” strings for AI bots and created my filter and jail files for Fail2Ban. A “user agent” is basically an identifier that is included in web requests. It’s how websites know what browser or operating system you’re using and can tailor themselves accordingly, such as automatically serving you the mobile version of a page if you’re using a phone or tablet. I decided to allow 5 requests within a 10 minute window. My logic being that this would allow them to get “something” if it was done in real time to respond to some search query, but not hammer the server all day long.
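To make the "5 requests within a 10 minute window" rule concrete, here is a small Python sketch of the idea. It is not Fail2Ban's code or configuration, and Fail2Ban's own counting may differ in the details. The class name `WindowBanTracker` and its methods are hypothetical; only the two numbers come from the rule described above.

```python
from collections import defaultdict, deque

MAX_REQUESTS = 5          # requests tolerated per window (from the post)
WINDOW_SECONDS = 10 * 60  # 10-minute window (from the post)


class WindowBanTracker:
    """Toy model of 'ban an IP that exceeds N matching requests in a time window'."""

    def __init__(self, max_requests=MAX_REQUESTS, window=WINDOW_SECONDS):
        self.max_requests = max_requests
        self.window = window
        self.hits = defaultdict(deque)  # ip -> timestamps of recent matching requests
        self.banned = set()

    def record(self, ip, timestamp):
        """Record one matching request. Return True if the IP is banned afterwards."""
        if ip in self.banned:
            return True
        hits = self.hits[ip]
        hits.append(timestamp)
        # Forget requests that have aged out of the window.
        while hits and timestamp - hits[0] > self.window:
            hits.popleft()
        if len(hits) > self.max_requests:
            self.banned.add(ip)
            return True
        return False


if __name__ == "__main__":
    tracker = WindowBanTracker()
    # Six requests, one second apart, from a documentation-only IP address.
    for second in range(6):
        print(second, tracker.record("203.0.113.5", second))
```

In this model the first five requests go through and the sixth triggers the ban, while a client that spaces its requests out widely enough never trips the limit.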

Almost immediately my email inbox started filling up with notifications. See one of the things Fail2Ban does by default is it will e-mail you whenever somebody triggers a ban. This is useful in case of accidental bans, or to let you know if a particular service is being targeted by an attacker.

I let it run for a while and the emails just kept coming, more and more every minute. I started reading the messages and they all had the same handful of user agent strings: Claudebot, Bytespider and Facebook, over and over and over. It became apparent that as soon as one IP address got banned, they would just switch IP addresses and try again. Facebook seemed to be mostly using their own infrastructure, but Claude (Anthropic) and Bytespider (ByteDance/TikTok) seemed to be mostly routing their traffic thru virtual servers hosted by people like Amazon, giving them access to a much wider array of IP addresses to switch to.

This worked to slow them down, but it didn’t stop them. At the new rate they wouldn’t be able to issue over a million queries a day simply because of the time it took them to issue a 6th request, realize they had been blocked and then move the task to a different IP address. However, I was still basically getting DoS (Denial of Service) attacked because my log files were being spammed and my inbox was overflowing, to the point I had to go digging for the normal emails I get in the morning when my daily maintenance scripts run. So, further action was required to get this under control. They weren’t taking the hint that I did not appreciate their attacks on my server. Their attempts to circumvent my protection measures by switching IP addresses really made it feel like I was on the defensive against a cyber attack being launched on my personal home server by three competing billion dollar artificial intelligence companies who are desperate to get new training data however they can.

So after 72 hours or so of just non-stop nonsense I decided to make a few changes. Remember, this has no effect on a normal human, or even other search indexers that I didn’t explicitly specify. The server is looking for markers that are specific to these AI bots and only applying these rules and blocks to that traffic. So far, for example, Google’s “Googlebot” has been respectful and reasonable, so I’ve left it alone.

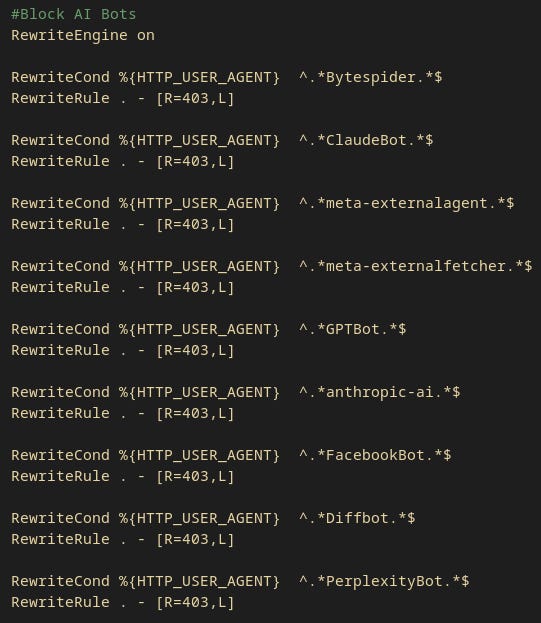

First I reduced the number of attempts an IP address could make to 1. Second I configured the webserver software itself to look for those user agents and instead of serving them even the 1 file they asked for before getting banned, to give them an “Error 403” which means forbidden. Previously, even though they were eventually getting blocked, they were actually getting the files they asked for, so they could basically download 5 files, get banned, switch IPs, get 5 more files, rinse and repeat. I configured the server to stop giving them any files altogether so that upon making even a single request they would get issued a “403 - Forbidden” message and then immediately get blocked by the firewall.
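The post does not show the actual webserver configuration, so the following is only a Python stand-in for the idea of refusing matching user agents before any file is served. It wraps a WSGI application and answers with "403 Forbidden" when the user agent contains one of a few markers. The marker list and the names `is_blocked_agent`, `block_ai_scrapers` and `demo_app` are assumptions made for this sketch.

```python
# Hypothetical, lowercase substrings identifying the scrapers to refuse.
BLOCKED_AGENT_MARKERS = ("claudebot", "bytespider", "facebook")


def is_blocked_agent(user_agent):
    """Return True if the User-Agent header contains a blocked marker."""
    agent = (user_agent or "").lower()
    return any(marker in agent for marker in BLOCKED_AGENT_MARKERS)


def block_ai_scrapers(app):
    """Wrap a WSGI app so that blocked agents get a 403 instead of any content."""

    def wrapper(environ, start_response):
        if is_blocked_agent(environ.get("HTTP_USER_AGENT")):
            body = b"403 Forbidden\n"
            headers = [
                ("Content-Type", "text/plain"),
                ("Content-Length", str(len(body))),
            ]
            start_response("403 Forbidden", headers)
            return [body]
        # Everyone else is passed through to the real application untouched.
        return app(environ, start_response)

    return wrapper


def demo_app(environ, start_response):
    """Placeholder application standing in for the real site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello\n"]


application = block_ai_scrapers(demo_app)
```

The 403 response then lands in the access log, where a log watcher such as Fail2Ban can pick it up and ban the address at the firewall after that single request.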

I also increased the amount of time an IP address gets banned from a week to 4 weeks. After about 3 days without slowing down I started to consider the possibility that IP addresses would start to get automatically un-banned, therefore opening them back up to be used for continuing the assault. I don’t want the bans to be permanent since many of them are from Amazon, which hosts a variety of services for a ton of third party companies, so a particular IP address issued by Amazon may not belong to somebody like Anthropic permanently.

Finally, I added a line to my jail configuration to stop Fail2Ban from emailing me for that particular jail whenever somebody got banned. At last count, from Sunday to Wednesday, I had received 4,508 emails from my server, all of them pertaining to unique IP addresses that had been banned for playing host to an AI scraper specified in my list. As of writing this post Fail2Ban is reporting 5,246 unique IP addresses that have been banned.

5,246 over the course of now 5 days is a lot better than over a million per day, but a lot of the reason for the slow-down, at least for the first few days, is because of the time it took for them to realize they were being blocked and actively take measures to circumvent that block and try again. And that’s still multiple times the number of requests Google is making without being blocked. It also demonstrates that despite being blatantly told “No” with every request, they are STILL switching IP addresses and trying again every time they get denied. It shows a blatant disregard for basic netiquette. In their desire to scrape anything and everything, not only are they pirating content they can’t legally acquire but they’re overwhelming internet infrastructure and throwing basic decency out the window.

See something else to keep in mind is that there’s a reason I chose to respond the way I did. See normally if you host a website and you don’t want a search engine like Google or the Internet Archive to index your site, you can place a small text file in the root folder of your site named “robots.txt”. Within this you can place a variety of directives, a common one being to just block search engines from indexing that site.

There’s a problem with this however. This is not an active defense mechanism. It’s the internet equivalent of asking nicely. Scrapers and indexers don’t have to obey what you put in here and there’s no software enforcement mechanism for whatever rules you put in there. It’s not like an authentication mechanism or a CAPTCHA that actively blocks access.
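To show why this is only "asking nicely", here is a short Python sketch using the standard library's `urllib.robotparser`. The robots.txt content, the example URL and the helper name `may_fetch` are made up for illustration. The point is that the check runs on the crawler's side, so it only has any effect if the crawler chooses to run it and honor the answer.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt that refuses one bot and allows everyone else.
ROBOTS_TXT = """\
User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_fetch(agent, url):
    """What a polite crawler would ask itself before requesting a URL."""
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    url = "https://example.org/wiki/some-article"  # placeholder URL
    for agent in ("ClaudeBot", "Googlebot"):
        print(agent, may_fetch(agent, url))
```

Nothing on the server stops a crawler from skipping this check entirely, which is why the blocking described earlier had to happen at the webserver and firewall instead.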

This has been mostly fine for ages, with the act of disregarding “robots.txt” being rare and severely frowned upon. Today however, with folks like Anthropic blatantly disregarding passive measures like this in order to maximize the amount of data they can hoover up to feed their hallucination machine, you might as well not even bother putting a “robots.txt” on your webserver. If you don’t want your site scraped, don’t make it public in the first place.

So what’s the solution? Well ideally folks would stop paying for AI products in the first place. They are essentially DDoS’ing (DDoS = Distributed Denial of Service) any publicly accessible site they can find, stealing copyrighted data en masse and either making stuff up out of the blue, or regurgitating some bastardized, maybe accurate, maybe not, form of their training data. They’re being disrespectful in their disregard for long held standards of behavior on the internet and most of the models and algorithms are completely proprietary, so there’s no way for an outside third party to audit what’s going into these things and how they operate. The best we can do is try to use the consumer facing front-ends presented to us to tease out whatever information we can. These folks are taking everything I complain about in the page I wrote on my personal site about AI and are cranking it up to 11.

Second, if you host any kind of webserver, you may want to double check your security settings, tighten things up a little and do what you can to starve these bots of useful data, especially if it’s personal, copyright protected, etc., because they do not care. They will not respect your polite requests to not be indexed; the only thing that works is active countermeasures to block their access to your site, and they’ll try to circumvent those if they can. They just want data, however they can get it, and if that means issuing over a million requests a day to valuable resources like iFixit, or even to my small personal/home server, that’s what they’re gonna do.

While I have found “AI” summaries of search results on sites like Brave search useful from time to time (mostly because it’s right there at the top of your search results page by default), they’re also wrong a good portion of the time and I end up having to visit a real website anyway. I have seen nothing since writing my original piece to change my stance on AI, and if anything, their behavior this past week has hardened my resolve against the general purpose use of generative AI. Even Hayao Miyazaki, one of the greatest artists and storytellers of our time, who once called generative AI “an insult to life itself”, has been essentially plagiarized by OpenAI.

The AI crap is getting ridiculous and it’s up to those of us who have been on the internet long enough to remember when DDoS’ing people was considered rude and/or illegal to do what we can to fight back and protect our infrastructure and those who use it.

I’ll end this post by paraphrasing a post I saw on social media some time ago, though I can’t seem to find it at the moment. If I do, I’ll update this post with a link and/or screenshot.

“I don’t want a robot that makes art and music so I have more time to do house chores. I want a robot that does house chores so I have more time to make art and music.”
